Facts for Kids

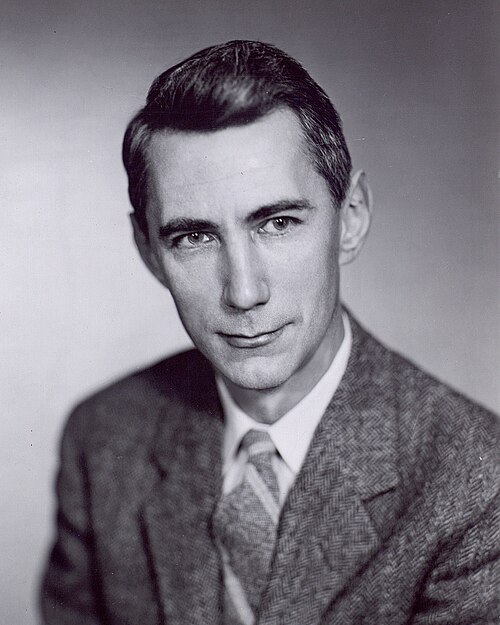

Claude Shannon was a pioneering American mathematician and electrical engineer known for founding information theory and revolutionizing digital communication.

Did you know?

💡 Claude Shannon is often referred to as the 'father of information theory.'

🔢 He introduced the concept of the bit as the basic unit of information.

📡 Shannon's groundbreaking 1948 paper, 'A Mathematical Theory of Communication,' founded information theory, while his 1937 master's thesis laid the foundation for digital circuit design.

🤖 He also made significant contributions to artificial intelligence and cryptography.

🎓 Shannon earned both his master's degree and Ph.D. from the Massachusetts Institute of Technology (MIT).

🚀 Beyond his theoretical work, he was an inventor who created a variety of devices, including a juggling machine and a machine that could play chess.

🏆 In 1966, he was awarded the National Medal of Science for his contributions to the field.

📊 Shannon's work is a cornerstone of modern telecommunications, influencing data compression and error correction.

🧠 He famously demonstrated a juggling robot as a fun display of his engineering skills.

⚙️ Shannon's legacy continues to impact fields such as computer science, artificial intelligence, and communication systems.

Overview

Claude Shannon was an amazing American mathematician and engineer born on April 30, 1916, in Petoskey, Michigan. 🏞️ He is often called the "father of information theory" because he helped us understand how information is sent, received, and stored, especially in computers and communication. 📡

Shannon made many cool discoveries that help technology today! He even had a playful side: he built fun gadgets, including a rocket-powered Frisbee! 🚀

His ideas changed the way we think about communication and helped make the internet and much more possible. 🌐

Shannon's Entropy

Entropy, in Shannon's terms, means the amount of uncertainty or surprise in information. 🤓

For example, if you flip a fair coin, there are only two equally likely outcomes: heads or tails. That's low entropy, exactly 1 bit! But if you have a box of balls in many different colors, predicting which one you'll pull out is harder, so the entropy is higher! 🎲

Shannon created a formula to calculate this uncertainty. It tells engineers how much information a message really carries, which helps them compress data and design communication systems with fewer errors. This concept is super useful in computer science and telecommunications! 📊

So next time you flip a coin, remember that you're playing with information entropy! 🪙
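
Here is a small sketch of how the entropy formula can be tried out on a computer. It adds up `-p * log2(p)` for every possible outcome, where `p` is the chance of that outcome. The function name `entropy_bits` and the four-color box of balls are made up for this illustration.

```python
import math

def entropy_bits(probabilities):
    """Shannon entropy H = -sum(p * log2(p)), measured in bits."""
    # Outcomes with probability 0 add nothing, so they are skipped.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

coin = [0.5, 0.5]                  # fair coin: heads or tails
balls = [0.25, 0.25, 0.25, 0.25]   # hypothetical box with four equally likely colors

print(entropy_bits(coin))   # 1.0
print(entropy_bits(balls))  # 2.0
```

The box of balls has more entropy than the coin because there are more equally likely outcomes to guess from.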

Later Life and Legacy

In his later life, Claude Shannon continued to inspire others with his creative ideas and playful inventions! 🎉

He worked at MIT and continued to explore new scientific areas, like artificial intelligence and robotics. 🤖

He was both a brilliant thinker and an inventor who loved to build, even making a chess-playing machine! ♟️ Shannon passed away on February 24, 2001, but his legacy lives on through technology that connects us today. 🌟

His work influences everything from smartphones to the internet. Without Claude Shannon, our world would look very different! ⚡

Shannon's Game Theory

Game theory is a way of understanding how people make choices and compete with one another. 🎲

Claude Shannon was fascinated by games and how they connect with information! In 1950, he wrote a famous paper explaining how a computer could be programmed to play chess by looking ahead at the moves each player might make. The same thinking works in simple games, too. For example, in a game of rock-paper-scissors, thinking about what your opponent might choose helps you decide your best move. 🤔🎉

Game theory itself has applications in economics, psychology, and even biology! Ideas like these help us understand how people and machines interact, which is important for improving technology and communication. Next time you're playing a game, think about those strategies! 🎮🧩

Early Life and Education

Claude Shannon loved learning from a young age! 📚

He grew up in the small town of Gaylord, Michigan, and his parents encouraged his curiosity. 🧒✨ Shannon went to the University of Michigan, where he studied electrical engineering and mathematics. He graduated in 1936 and then went to the Massachusetts Institute of Technology (MIT), where he wrote his famous master's thesis in 1937. 🎓

At MIT, he showed how the 0s and 1s of binary logic could be built out of electrical switches. This early work laid the foundation for his future inventions. Shannon was a curious boy with a dream to make the world better through science! 🌟

Impact on Telecommunications

Claude Shannon's work greatly influenced telecommunications, which means communicating over long distances using technology! 📡

Thanks to his theories, communication systems became more reliable and efficient. His ideas helped engineers design better methods for sending voices and pictures over long distances, like in telephones and radio. 📞📻 They also guided the design of satellite communications, which connect people all over the world. 🌍💬

Without his contributions, things like video chatting, streaming, and online gaming might not even exist! Today, we rely on technology in so many ways, and Shannon's ideas helped make that possible! 🌟

Contributions to Cryptography

Claude Shannon wasn't just into information theory; he also made big contributions to cryptography! 🔐

Cryptography is the art of keeping information secret. During World War II, Shannon worked on ways to help protect military communications. He studied the "one-time pad," a coding method that uses a random key as long as the message, and proved mathematically that it is unbreakable when each key is kept secret and used only once. This means that even if someone intercepts the message, they can't understand it without the key! 🗝️
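
A one-time pad can be sketched in a few lines of code. This toy example mixes each byte of the message with a matching byte of a random key using XOR, and mixing again with the same key brings the message back. The helper name `xor_bytes` and the message `HELLO` are made up for this illustration, and a real system would also need a safe way to share the key and must never reuse it.

```python
import secrets

def xor_bytes(data, key):
    """Combine each data byte with the matching key byte using XOR."""
    if len(key) != len(data):
        raise ValueError("a one-time pad key must be exactly as long as the message")
    return bytes(d ^ k for d, k in zip(data, key))

message = b"HELLO"
key = secrets.token_bytes(len(message))   # fresh random key, used only once

ciphertext = xor_bytes(message, key)      # encrypt
recovered = xor_bytes(ciphertext, key)    # decrypt with the same key

print(recovered)  # b'HELLO'
```

Because the key is random, the scrambled bytes will look different every time the example runs.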

His innovations have influenced how we secure our online information today in banking and social media. 🛡️ Shannon's work showed how science can keep our secrets safe!

Mathematics and Information Theory

Claude Shannon introduced a revolutionary idea called "information theory" in 1948. 🤔

He used math to describe how much information can be sent through a channel, like a phone line! 📞

Shannon created a formula that gives the fastest rate at which information can travel through a noisy channel, known as the channel capacity. His work shows how to send messages with almost no mistakes, which is super important for things like texting and video calls. 💬✨ The ideas he shared built the base for modern communication, including how computers send data reliably! His work has influenced technology around the world! 🌍
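
One well-known formula from this area is the Shannon-Hartley formula, C = B × log2(1 + S/N). It says the top speed C of a channel (in bits per second) depends on its bandwidth B and on how much stronger the signal S is than the noise N. The sketch below tries it out. The function name `channel_capacity` and the phone-line numbers are assumptions picked for illustration, not measurements.

```python
import math

def channel_capacity(bandwidth_hz, signal_to_noise):
    """Shannon-Hartley limit: C = B * log2(1 + S/N), in bits per second."""
    return bandwidth_hz * math.log2(1 + signal_to_noise)

# Hypothetical phone line: 3000 Hz of bandwidth, signal 1000 times stronger than the noise.
capacity = channel_capacity(3000, 1000)
print(round(capacity))  # roughly 29,900 bits per second
```

Making the channel wider or the signal cleaner raises the limit, but no clever trick can send data faster than this limit without mistakes.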

Digital Circuits and Boolean Algebra

Did you know that Claude Shannon helped invent digital circuits? 💻

In his 1937 master's thesis, he used Boolean algebra to explain how electrical circuits can represent logic. Boolean algebra uses only two values, true (1) and false (0), just like in computers! By showing how simple on-and-off switches could be combined to carry out complex functions, he paved the way for the modern computer. 🖥️
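
To get a feel for the idea, here is a small sketch that treats switches as 1 (on) and 0 (off). Two switches in a row act like AND, and two switches side by side act like OR. Combining them builds a "half adder," a tiny circuit that adds two binary digits. The function names `series`, `parallel`, and `half_adder` are made up for this illustration and are not taken from Shannon's thesis.

```python
def series(a, b):
    """Two switches in a row: current flows only if both are on (AND)."""
    return bool(a and b)

def parallel(a, b):
    """Two switches side by side: current flows if either is on (OR)."""
    return bool(a or b)

def half_adder(a, b):
    """Add two binary digits and return (sum digit, carry digit)."""
    carry = series(a, b)
    total = parallel(a, b) and not carry   # on when exactly one input is on
    return int(total), int(carry)

for a in (0, 1):
    for b in (0, 1):
        print(a, "+", b, "->", half_adder(a, b))
```

The last line printed is `1 + 1 -> (0, 1)`, which is binary for two: a sum digit of 0 with a carry of 1.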

His ideas help computer engineers design faster and more efficient machines. Thanks to Shannon's groundbreaking work in digital technology, the chips behind your favorite video game or web browser can do their jobs! 🎮🌟
